A team from CSE is getting ready to attend SIGCOMM 2015, the flagship annual conference of the ACM Special Interest Group on Data Communication, which covers applications, technologies, architectures and protocols for computer communication. The week-long conference takes place in London, UK, starting Aug. 17. Three CSE faculty members will attend (George Porter, Alex Snoeren and Geoffrey Voelker), as will PhD student Arjun Roy (PhD ’16). The reason? While interning at Facebook, Roy worked on a project to measure the company's datacenter network. The results of the joint UC San Diego-Facebook investigation will be published in a SIGCOMM paper, "Inside the Social Network's (Datacenter) Network." Snoeren and Porter co-authored the paper with Roy and two Facebook colleagues, Hongyi Zeng and Jasmeet Bagga.

In the paper, the co-authors note that "datacenter operators are generally reticent to share the actual requirements of their applications, making it challenging to evaluate the practicality of any particular design" of the network fabrics that interconnect and manage traffic within large-scale datacenters. Most prior studies were based on Microsoft workloads, which may not be representative of other cloud services, so access to some of Facebook's datacenters and workloads breaks new ground in showing how traffic inside datacenters is handled. "While Facebook operates a number of traditional datacenter services like Hadoop, its core Web service and supporting cache infrastructure exhibit a number of behaviors that contrast with those reported in the literature," according to the paper's abstract. "We report on the contrasting locality, stability, and predictability of network traffic in Facebook's datacenters, and comment on their implications for network architecture, traffic engineering, and switch design."

First author Arjun Roy and his co-authors from UC San Diego and Facebook conclude that Facebook's datacenter network supports a variety of distinct services whose traffic patterns differ substantially from those in previously published studies. "The different applications, combined with the scale (hundreds of thousands of nodes) and speed (10-Gbps edge links) of Facebook's datacenter network result in workloads that contrast in a number of ways from most previously published datasets," they note. "Space constraints prevent us from providing an exhaustive account, [but] we describe features that may have implications for topology, traffic engineering, and top-of-rack switch design."

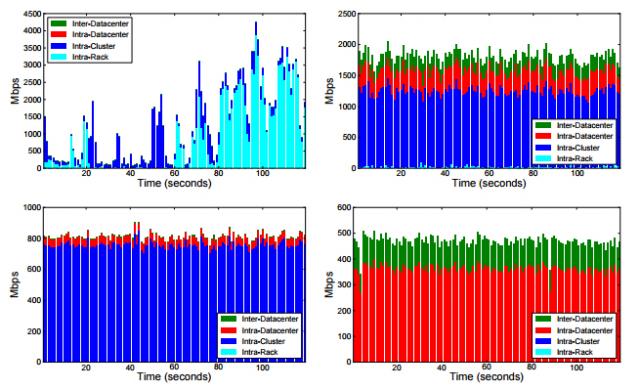

Given the interest in the UC San Diego-Facebook paper, SIGCOMM is also publishing a public review of the research by Microsoft engineer Srikanth Kandula, who highlighted some "novel and interesting measurements." For example, more than 80 percent of traffic in a Hadoop cluster crosses racks; that is, traffic shows less rack locality than previous studies reported for other datacenters. Kandula also notes that "the overall network link utilization is quite small -- an average of less than 10 percent on all potential bottlenecks," adding that "the other dominant application at Facebook is memcached-style request-response workload, which comprises primarily of small packets and has some specific traffic patterns." [Pictured at right: Per-second traffic locality by system type over a two-minute span: clockwise from top-left, Hadoop, Web server, cache follower, and cache leader.]

Former CSE Prof. George Varghese, who left UC San Diego to join Microsoft Research, will also be at SIGCOMM 2015, where he is co-teaching a tutorial on network verification.

Read the UC San Diego-Facebook paper presented at SIGCOMM 2015.

View a public review of the UC San Diego-Facebook paper.