Researchers in the Department of Computer Science and Engineering at the University of California San Diego Jacobs School of Engineering have proposed a novel rendering technique that could make re-rendering more efficient and produce more visually appealing images.

PhD student Bing Xu, part of a joint team of computer scientists from the UC San Diego Center for Visual Computing (VISCOMP) and industry partner Adobe, has developed a theoretical framework for re-rendering scenes after small changes, such as moving objects or edited materials. The paper, “Residual Path Integrals for Re-Rendering,” received a best paper award at the 2024 Eurographics Symposium on Rendering (EGSR), held recently in London.

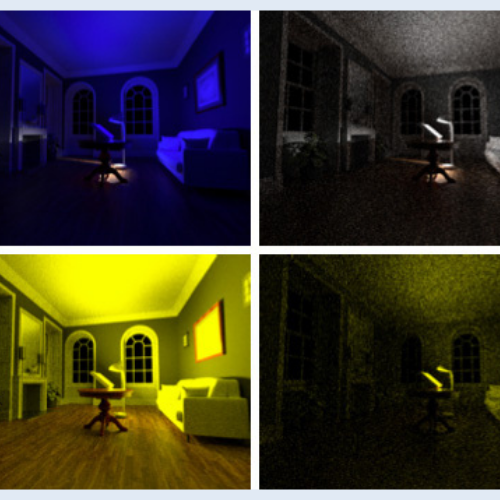

Conventional rendering techniques for scene authoring and gaming applications are primarily designed and optimized for single-frame rendering. Besides being expensive, these methods evaluate the classical path integral from scratch for every frame, so they cannot isolate minor differences, such as shadows cast by dynamic objects or changes in global-illumination shading, between scenes that are otherwise highly coherent.

The team’s approach addresses these limitations with a method for efficient incremental re-rendering of slightly modified scenes with dynamic objects. Their method formulates a residual path integral that characterizes the small differences in light transport between the old and new scenes.
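To make the idea of a “residual” more concrete, here is a toy sketch in Python. It is not the paper’s residual path integral, its sampling techniques, or its path mappings; it only illustrates the general principle of reusing an old result and estimating the difference between two integrals with shared samples. The one-dimensional integrands `f_old` and `f_new`, the shadowed interval, and the function names are all hypothetical, and the old result is assumed to be known exactly for simplicity.

```python
import random
import statistics

# Toy 1-D "light transport" integrands on [0, 1]. These are hypothetical
# stand-ins for pixel contributions, not anything taken from the paper.
def f_old(x: float) -> float:
    # Old scene: smooth shading that varies across the domain.
    return 1.0 + x

def f_new(x: float) -> float:
    # New scene: identical, except a moved object now shadows [0.45, 0.5),
    # halving the contribution there.
    return 0.5 * f_old(x) if 0.45 <= x < 0.5 else f_old(x)

# Integral of f_old over [0, 1]; assumed known exactly from the previous
# frame here (in practice it would itself be an estimate).
OLD_VALUE = 1.5

def rerender_from_scratch(n: int, rng: random.Random) -> float:
    # Conventional approach: estimate the new integral independently.
    return sum(f_new(rng.random()) for _ in range(n)) / n

def rerender_with_residual(n: int, rng: random.Random) -> float:
    # Residual approach: reuse the old result and estimate only the
    # difference f_new - f_old, evaluating both integrands at the same x.
    # The difference is zero outside the shadowed interval, so most
    # samples add no noise.
    total = 0.0
    for _ in range(n):
        x = rng.random()
        total += f_new(x) - f_old(x)
    return OLD_VALUE + total / n

if __name__ == "__main__":
    rng = random.Random(0)
    exact = 1.5 - 0.5 * 0.07375  # subtract half of f_old's integral over [0.45, 0.5)
    direct = [rerender_from_scratch(1000, rng) for _ in range(200)]
    residual = [rerender_with_residual(1000, rng) for _ in range(200)]
    print(f"exact          {exact:.5f}")
    print(f"direct   mean {statistics.mean(direct):.5f}  var {statistics.pvariance(direct):.2e}")
    print(f"residual mean {statistics.mean(residual):.5f}  var {statistics.pvariance(residual):.2e}")
```

Because the difference vanishes wherever the moved object has no effect, the residual estimate in this toy setup shows lower variance than re-estimating the new scene from scratch with the same number of samples. Real scenes involve far more complex path spaces, which is where the paper’s formulation comes in.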

According to the team, the formulation yields speed-ups over previous methods. It also brings new insight to the re-rendering problem, paving the way for new types of sampling techniques and path mappings.

The Eurographics Symposium on Rendering (EGSR) recognizes work that shapes the future of image synthesis in computer graphics and related fields, such as human perception, mixed and augmented reality, deep learning, and computational photography.

In addition to first author Bing Xu, the paper’s coauthors include CSE Professor Tzu-Mao Li, Iliyan Georgiev of Adobe, PhD student Trevor Hedstrom, and CSE Professor Ravi Ramamoorthi, Director of VISCOMP and the paper’s senior author.

This work was funded in part by NSF grants 2105806, 2212085, and 2210409; gifts from Adobe and Google; the Ronald L. Graham Chair; and the UC San Diego Center for Visual Computing.

By Kimberley Clementi