By Josh Baxt

A team of UC San Diego engineers – Rose Yu, Henrik Christensen and Nikolay Atanasov – has received a $600,000 grant from the Department of Defense’s Defense University Research Instrumentation Program (DURIP) to fund a deep learning cluster that will store and process real-time sensor data from robotic vehicles and drones.

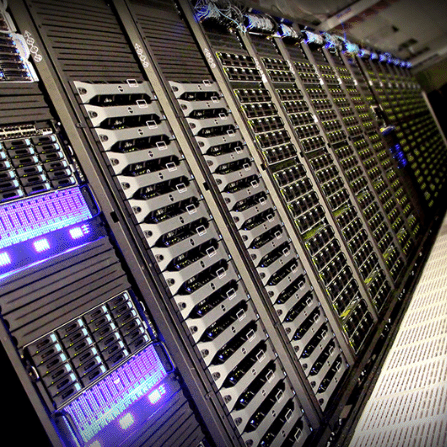

The project, called Computational Clusters for Robotic Deep Learning in Complex Spatiotemporal Environments, will purchase 200 graphics processing units (GPUs) and other computing infrastructure, which will be integrated into the San Diego Supercomputer Center’s Nautilus cluster. The added infrastructure will support efforts to analyze massive datasets from autonomous vehicles and develop better deep learning models to help them operate more effectively in dynamic environments.

“We want to unlock the potential of robotic deep learning in self-driving cars and drones,” said Yu, an assistant professor in the Department of Computer Science and Engineering (CSE), a faculty member in the Halıcıoğlu Data Science Institute and the principal investigator on the grant. “This will increase their computing power and give us major opportunities to design efficient algorithms that learn from complex sensing data.”

Drowning in Data

Robotic vehicles and drones are equipped with multiple sensors to help them navigate: video, light detection and ranging (LIDAR), inertial measurement units (IMUs) and others. In principle, these sensors can help them avoid collisions with people, buildings and other obstacles, but to get there, the researchers need to manage the data’s volume and complexity.

“I have two autonomous driving vehicles, basically golf carts, that we drive around campus,” said Christensen, Qualcomm Chancellor's Chair of Robot Systems and Distinguished Professor of Computer Science at CSE. “If we run all the sensors at max capacity, we would generate 155 terabytes of data every day.”

This deluge raises an array of challenges: how to store the data, move it around, analyze it and harness it to develop better models. Christensen’s team has to throw out a lot of data because there’s simply nowhere to put it.

These limitations have been a drag on progress, forcing engineers to make concessions. For example, the carts are programmed to wait three seconds at every intersection to ensure the coast is clear, which is problematic for both researchers and the unsuspecting drivers who get trapped behind them.

“This cluster is fundamental to getting more computing power so we can process more data and generate more situational awareness around the vehicles,” said Christensen. “We want to get closer to having the necessary power to make the carts more fluid in traffic.”

Navigating campus traffic is a great start, but Christensen’s team wants more. Self-driving cars must act independently, but at times they may need a human driver to take over, which requires the cars to predict what will happen next.

“The general consensus is that, for a driver to take over a robotic car, they will need around 30 seconds to understand the situation,” said Christensen. “In other words, we must develop a model that predicts what the environment is going to look like 30 seconds in the future.”

Look! Up in the Sky!

Autonomous drones may not have pedestrians to contend with, but they do encounter buildings, electric wires, wind, rain, birds and other drones. Atanasov, an assistant professor in the Department of Electrical and Computer Engineering, must teach his drones to understand this dynamic environment.

“You need models of how your sensors operate, how your robot moves and the environment around them,” said Atanasov. “In the past, these models have been designed by hand or based on physics principles, like Newton's laws. Now, we want to design models based on data from the actual environment.”

Flying is challenging on its own, but eventually autonomous drones must earn their keep by monitoring crops or fires or performing other jobs.

“If we can measure particle concentrations in the air, we can produce a very different type of pollution map than a visual camera,” said Atanasov. “Overall, we want to use multi-modal data to teach these drones to fly better on their own and to collect more environmental insights.”

Expanding the University’s Computer Infrastructure

The new cluster gives Christensen and Atanasov the means to collect and store more data for careful analysis. Its GPUs will also communicate with the robotic vehicles in real time, enabling more fluid control.

At the same time, Yu and her students will develop deep learning algorithms that can manage the complexity of the data and improve robotic performance.

“Nikolay is very good at building environmental models,” said Christensen. “Rose is very good at building on spatial/temporal models to see how the world is evolving. Together, we can develop new capabilities to make autonomous cars and drones much smarter.”

The new GPUs will also support computer science education on many levels. Deep learning classes are running into data bottlenecks that keep students from executing their programs as quickly as they would like, and the researchers hope the new cluster will help ease them.

“We want to expand the data center to support faculty and students,” said Yu. “I think this grant will be beneficial for everyone at UC San Diego.”