Better methods for computer vision and object detection are among the innovations presented by CSE and other robotics researchers from UC San Diego at the 2017 International Conference on Intelligent Robots and Systems (IROS). The five-day conference ends September 28 in Vancouver, British Columbia.


“IROS is one of the premier conferences in robotics,” said CSE professor Henrik Christensen, director of the Contextual Robotics Institute at UC San Diego. “It is essential for our institute that we present key papers across manufacturing, materials, healthcare and autonomy. I am very pleased to see that we have a strong showing at this flagship conference.”

This year's conference focused on “friendly people, friendly robots.” Robots and humans are becoming increasingly integrated in various application domains, according to conference organizers. “We work together in factories, hospitals and households, and share the road,” organizers said. “This collaborative partnership of humans and robots gives rise to new technological challenges and significant research opportunities in developing friendly robots that can work effectively with, for, and around people.”

CSE researchers presented two robotics-related papers at IROS 2017:

- Faster Robot Perception Using Salient Depth Partitioning, by CSE Ph.D. students Darren Chan and Angelique Taylor with Prof. Laurel D. Riek

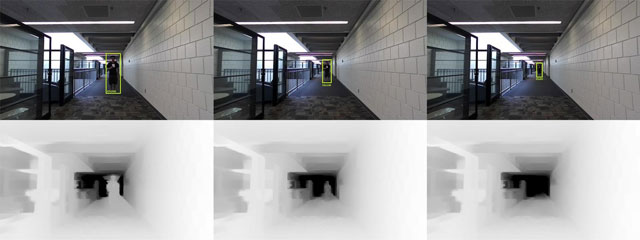

Better interactions between robots and people require better computer vision, and in one paper, researchers led by CSE professor Laurel Riek propose using depth information to achieve it. Riek is the senior author on the paper, which introduces Salient Depth Partitioning (SDP), a depth-based region cropping algorithm designed to be easily adapted to existing detection algorithms.


SDP is designed to give robots a better sense of visual attention and to reduce the processing time of pedestrian detectors. Unlike proposal generators, the team's algorithm generates sparse regions to combat image degradation caused by robot motion, which makes it better suited to real-world operation. SDP also achieves real-time performance (77 frames per second) on a single processor without a graphics processing unit (GPU). "Our algorithm requires no training, and is designed to work with any pedestrian detection algorithm, provided that the input is in the form of a calibrated RGB-D image," according to the paper's abstract. "We tested our algorithm with four state-of-the-art pedestrian detectors (HOG and SVM, Aggregate Channel Features, Checkerboards, and R-CNN), and show that it improves computation time by up to 30%, with no discernible change in accuracy."
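The abstract describes SDP only at a high level, so the sketch below does not reproduce it. It is a hypothetical illustration of the general idea of depth-based region cropping: valid pixels nearer than a cutoff are grouped into fixed-width depth bands, and each well-populated band yields one bounding box that a detector could process instead of the full frame. The function names, bin width, depth cutoff and pixel threshold are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max), inclusive


def depth_regions(depth: List[List[float]], bin_width: float = 0.5,
                  max_depth: float = 3.0, min_pixels: int = 4) -> List[Box]:
    """Hypothetical depth-band cropping (not the published SDP algorithm).

    Valid pixels nearer than max_depth are grouped into depth bands of width
    bin_width; every band holding at least min_pixels pixels yields one box.
    """
    bands: Dict[int, List[Tuple[int, int]]] = {}
    for r, row in enumerate(depth):
        for c, d in enumerate(row):
            if d <= 0.0 or d > max_depth:  # missing reading or far background
                continue
            bands.setdefault(int(d // bin_width), []).append((r, c))

    boxes: List[Box] = []
    for band in sorted(bands):  # nearest band first
        pixels = bands[band]
        if len(pixels) < min_pixels:  # too sparse to count as a salient layer
            continue
        rows = [r for r, _ in pixels]
        cols = [c for _, c in pixels]
        boxes.append((min(rows), min(cols), max(rows), max(cols)))
    return boxes


def crop(image: List[List[float]], box: Box) -> List[List[float]]:
    """Return the sub-image covered by an inclusive bounding box."""
    r0, c0, r1, c1 = box
    return [row[c0:c1 + 1] for row in image[r0:r1 + 1]]


# Toy 4x6 depth map in metres: an object at about 1.2 m in columns 1-2,
# background at 4.0 m, one missing reading (0.0) and one stray pixel at 2.6 m.
depth = [
    [4.0, 1.2, 1.3, 4.0, 0.0, 4.0],
    [4.0, 1.1, 1.2, 4.0, 4.0, 4.0],
    [4.0, 1.2, 1.2, 4.0, 2.6, 4.0],
    [4.0, 1.3, 1.2, 4.0, 4.0, 4.0],
]
regions = depth_regions(depth)
patches = [crop(depth, box) for box in regions]
print(regions)  # [(0, 1, 3, 2)]
```

In this toy frame, a detector would only need to examine the 4x2 patch rather than the full 4x6 image. Bucketing by fixed-width depth bands is a deliberate simplification: two objects at similar depths end up in one box, which a real system would need to separate.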

- Belief Tree Search for Active Object Recognition, by recent CSE alumnus Mohsen Malmir (Ph.D. '17) and Prof. Garrison W. Cottrell.

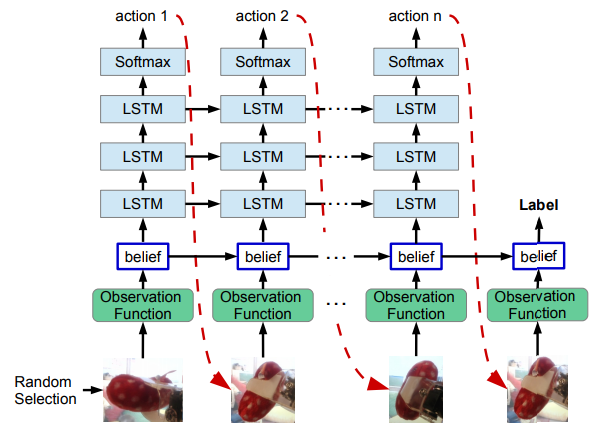

Separately, CSE professor Gary Cottrell is senior author on a paper that describes a way to improve object recognition. Active Object Recognition (AOR) has typically been approached as an unsupervised learning problem, in which the optimal trajectories for inspecting an object are not known and must be discovered, either by reducing label uncertainty or by training with reinforcement learning.


Such approaches suffer from local optima and offer no guarantees about the quality of their solutions, according to the paper's abstract. "In this paper, we treat AOR as a Partially Observable Markov Decision Process (POMDP) and find near-optimal values and corresponding action-values of training data using Belief Tree Search (BTS) on the AOR belief Markov Decision Process (MDP)," write Cottrell and Malmir. "AOR then reduces to the problem of knowledge transfer from these action-values to the test set." The researchers trained a Long Short-Term Memory (LSTM) network on these values to predict the best next action on the training-set rollouts, and showed experimentally that their method generalizes well, exploring novel objects and novel views of familiar objects with high accuracy. They also compared this supervised scheme against guided policy search and guided Neurally Fitted Q-iteration, and found that the LSTM network reaches higher recognition accuracy than both.

The team also looked at optimizing the observation function to increase the total reward collected during active recognition. In AOR, the observation function is known only approximately, so they derived a gradient-based update for the observation function that increases the total expected reward. In doing so, they showed that "by optimizing the observation function and retraining the supervised LSTM network, the AOR performance on the test set improves significantly."
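The abstract gives no implementation details, so the sketch below is only a hypothetical illustration of the ingredients it names, not the authors' BTS. A belief (a probability distribution over object labels) is updated with Bayes' rule after each new view, and the next action is chosen by a generic depth-limited expectimax search over the tree of future beliefs. The objects, views, actions, observation model and leaf reward are all invented for illustration; the paper's further step of training an LSTM to predict the actions such a search picks is not shown.

```python
from typing import Dict, Optional, Tuple

Belief = Dict[str, float]  # probability of each candidate object label

# Hypothetical toy problem: two objects, three camera views on a circle,
# two actions (rotate one view left or right), two coarse observations.
LABELS = ("mug", "bowl")
ACTIONS = (-1, +1)
OBSERVATIONS = ("handle", "round")
N_VIEWS = 3


def obs_prob(obs: str, label: str, view: int) -> float:
    """Hypothetical observation model P(obs | label, view)."""
    # The mug's handle is usually visible only from view 2; a bowl rarely
    # produces a "handle" observation from any view.
    p_handle = 0.9 if (label == "mug" and view == 2) else 0.1
    return p_handle if obs == "handle" else 1.0 - p_handle


def update(belief: Belief, obs: str, view: int) -> Belief:
    """Bayes filter step: b'(label) is proportional to P(obs | label, view) * b(label)."""
    unnorm = {label: obs_prob(obs, label, view) * p for label, p in belief.items()}
    total = sum(unnorm.values())
    return {label: p / total for label, p in unnorm.items()}


def search(belief: Belief, view: int, depth: int) -> Tuple[float, Optional[int]]:
    """Depth-limited expectimax over the belief tree.

    Returns (value, best first action). A leaf's value is the probability that
    guessing the most likely label is correct (an assumed reward).
    """
    if depth == 0:
        return max(belief.values()), None
    best_value, best_action = float("-inf"), None
    for action in ACTIONS:
        next_view = (view + action) % N_VIEWS
        value = 0.0
        for obs in OBSERVATIONS:
            # Predictive probability of this observation from the next view.
            p_obs = sum(obs_prob(obs, label, next_view) * p for label, p in belief.items())
            if p_obs == 0.0:
                continue
            child_value, _ = search(update(belief, obs, next_view), next_view, depth - 1)
            value += p_obs * child_value
        if value > best_value:
            best_value, best_action = value, action
    return best_value, best_action


prior = {"mug": 0.5, "bowl": 0.5}
value, action = search(prior, view=0, depth=1)
print(action, round(value, 3))  # -1 0.9
```

From view 0, rotating left reaches view 2, where the handle is informative, so the search prefers it (expected accuracy 0.9) over rotating right to an uninformative view (0.5). Exhaustive search branches by the number of actions times the number of observations at every level, so it is only practical for short horizons.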

Meanwhile, other Contextual Robotics Institute faculty members, including Electrical and Computer Engineering professor Michael Yip and Mechanical and Aerospace Engineering professor Michael Tolley, also had papers presented at IROS 2017.